VXLAN BGP EVPN with L3Out and vPC

Configure VXLAN BGP EVPN with L3Out and vPC

Theory

With VXLAN you can create Layer 2 adjacencies over a Layer 3 network. This increases flexibility in network design, since you can stretch your network across multiple data centers without paying too much attention to routing and segmentation.

The easiest approach is the flood-and-learn scenario, but it still relies on data-plane learning, so it's not our first choice.

VXLAN BGP EVPN is the most popular option. Here the local leaf learns a new MAC address and advertises it over BGP EVPN as an L2 route. All other leaves participating in BGP EVPN receive the route advertisement, so the MAC no longer has to be learned by flooding packets out of all ports. Broadcast, unknown unicast and multicast (BUM) traffic is carried over multicast trees that PIM builds in the underlay for your VNIs. Instead of relying on flooded ARP for endpoint learning, each leaf consults the EVPN control plane, which is basically a routing table for MAC and IP addresses, to find the destination, and then encapsulates the packet in VXLAN.
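
The learn-and-advertise behaviour described above can be sketched as a toy model in Python. This is not real BGP; the class names, the MAC address and the dictionary-based table are all made up for illustration, and the spine is reduced to a simple relay.

```python
from dataclasses import dataclass, field


@dataclass
class Leaf:
    """A leaf with a VTEP IP and a (vni, mac) -> remote VTEP table."""
    name: str
    vtep_ip: str
    mac_table: dict = field(default_factory=dict)

    def lookup(self, vni, mac):
        """Return the remote VTEP for a known MAC, or None if it is unknown."""
        return self.mac_table.get((vni, mac))


class RouteReflector:
    """Stands in for the spine: relays an advertisement to all other leaves."""

    def __init__(self):
        self.leaves = []

    def attach(self, leaf):
        self.leaves.append(leaf)

    def advertise(self, origin, vni, mac):
        # Every leaf except the originator installs the MAC route.
        for leaf in self.leaves:
            if leaf is not origin:
                leaf.mac_table[(vni, mac)] = origin.vtep_ip


spine = RouteReflector()
leaf1 = Leaf("Leaf1", "10.1.0.1")
leaf2 = Leaf("Leaf2", "10.1.0.2")
spine.attach(leaf1)
spine.attach(leaf2)

# Leaf1 learns a (hypothetical) host MAC locally and advertises it.
spine.advertise(leaf1, 10100, "0050.5600.0001")
print(leaf2.lookup(10100, "0050.5600.0001"))
```

Leaf2 now knows which VTEP to send the frame to without any flooding. A MAC that was never advertised returns None, which corresponds to the case where the frame has to be treated as BUM traffic.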

Finally, when both leaves have the endpoint in their EVPN routing table, Leaf A encapsulates the packet, tagging it with the VNI (VXLAN Network Identifier), and sends it through a logical interface called NVE to Leaf B.
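
To make the VNI tagging a bit more concrete, here is a minimal Python sketch of the 8-byte VXLAN header as laid out in RFC 7348 (flags, reserved bits, 24-bit VNI, reserved byte). The function names are my own, and the outer Ethernet, IP and UDP headers that the NVE interface also adds are left out.

```python
import struct

VXLAN_UDP_PORT = 4789          # IANA-assigned destination port for VXLAN
VXLAN_FLAG_VNI_VALID = 0x08    # "I" flag: the VNI field is valid


def vxlan_header(vni):
    """Build the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags in the top byte, 24 reserved bits.
    # Word 2: VNI in the top 24 bits, 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def vxlan_vni(header):
    """Extract the VNI from a VXLAN header."""
    _flags_word, vni_word = struct.unpack("!II", header[:8])
    return vni_word >> 8


def encapsulate(inner_frame, vni):
    """Prepend the VXLAN header to the original Ethernet frame."""
    return vxlan_header(vni) + inner_frame


header = vxlan_header(10100)
print(header.hex())
print(vxlan_vni(header))
```

The receiving leaf reads the VNI back out of the header to decide which VLAN or VRF the inner frame belongs to.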

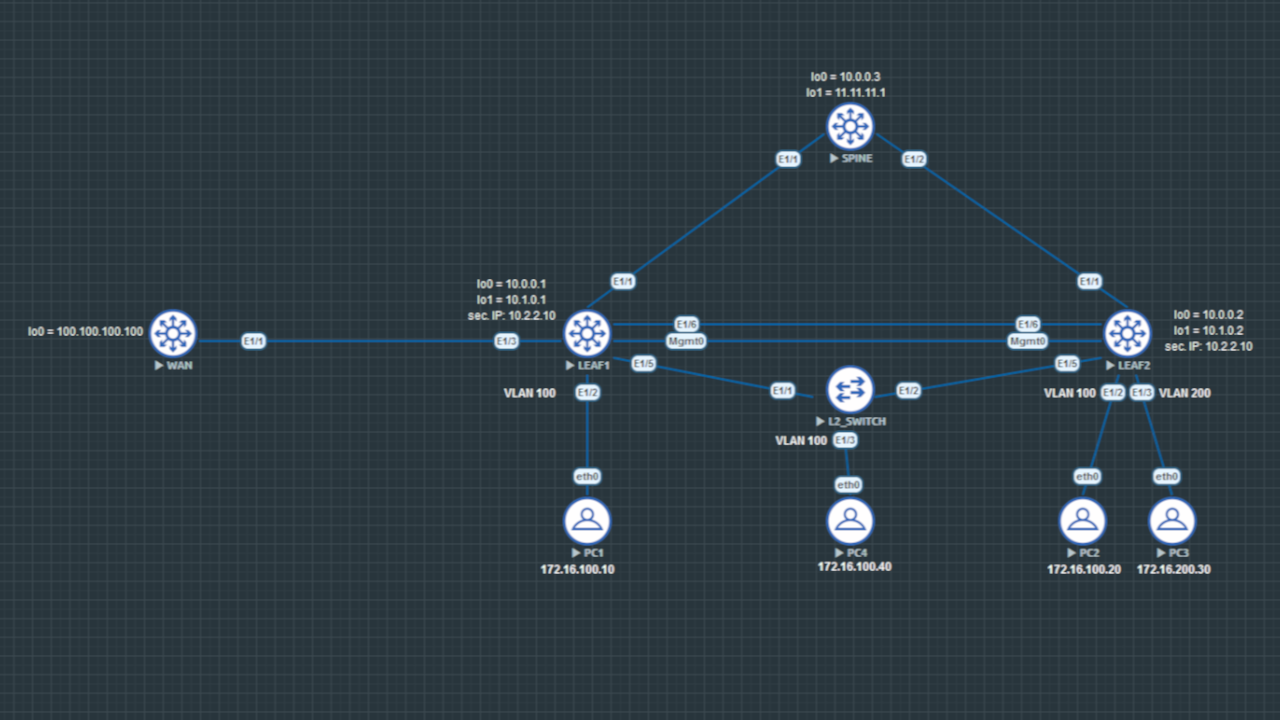

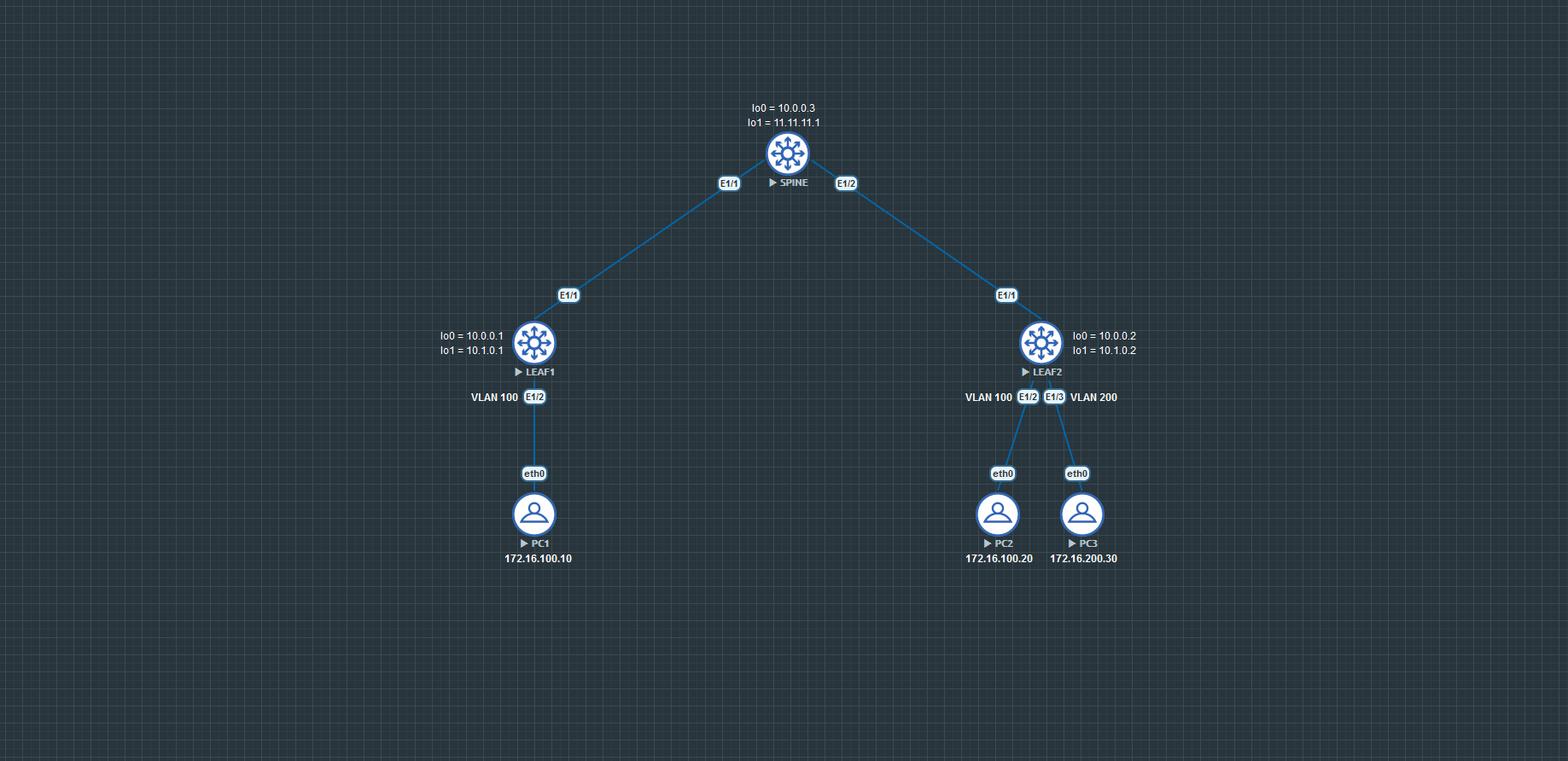

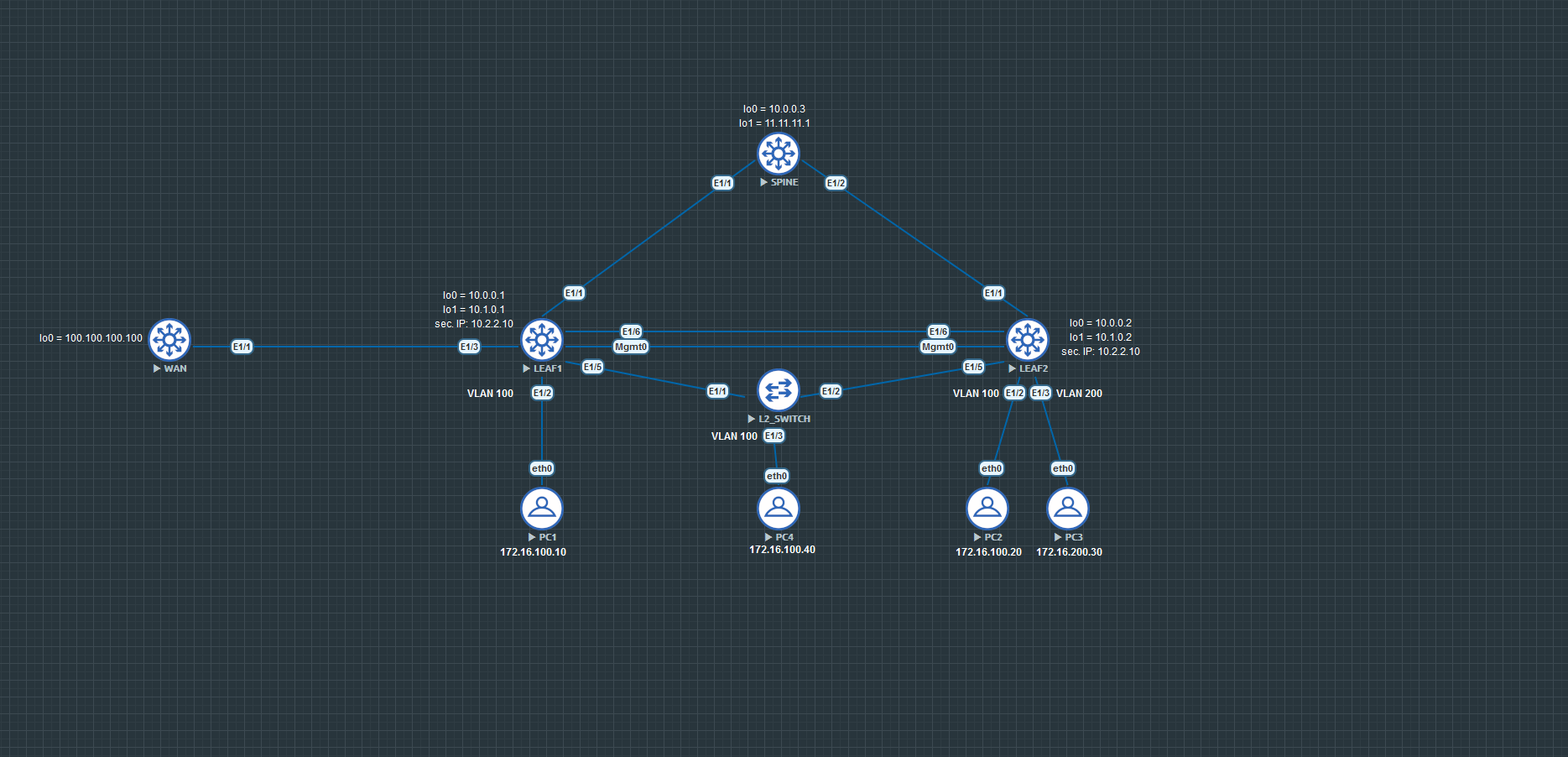

We will first configure VXLAN BGP EVPN with one spine and two leaves, and after that we will configure L3Out and vPC. Every configuration in this article was done on the Nexus version nxos.9.2.4.bin.

VXLAN BGP EVPN

Underlay routing with OSPF

You can use any IGP routing protocol to configure the underlay routing.

Leaf1

feature ospf

router ospf UNDERLAY

router-id 10.0.0.1

int lo0

desc BGP_EVPN_PEERING

ip add 10.0.0.1/32

ip router ospf UNDERLAY area 0

int eth1/1

desc TO_SPINE1

no switchport

ip add 192.168.1.1/30

ip router ospf UNDERLAY area 0

ip ospf network point-to-point

mtu 9216

no shut

Leaf2

feature ospf

router ospf UNDERLAY

router-id 10.0.0.2

int lo0

desc BGP_EVPN_PEERING

ip add 10.0.0.2/32

ip router ospf UNDERLAY area 0

int eth1/1

desc TO_SPINE1

no switchport

ip add 192.168.2.1/30

ip router ospf UNDERLAY area 0

ip ospf network point-to-point

mtu 9216

no shut

Spine

feature ospf

router ospf UNDERLAY

router-id 10.0.0.3

int lo0

desc BGP_EVPN_PEERING

ip add 10.0.0.3/32

ip router ospf UNDERLAY area 0

int eth1/1

desc TO_LEAF1

no switchport

ip add 192.168.1.2/30

ip router ospf UNDERLAY area 0

ip ospf network point-to-point

mtu 9216

no shut

int eth1/2

desc TO_LEAF2

no switchport

ip add 192.168.2.2/30

ip router ospf UNDERLAY area 0

ip ospf network point-to-point

mtu 9216

no shut

Verify OSPF adjacencies with show ip ospf neighbor.

Multicast with PIM

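We use PIM with a rendezvous point (RP), which will be the spine. The leaf switches meet there to form multicast trees.

We will configure the RP address manually since we only have one spine. With more than one spine you would normally use a phantom RP, so that several spines can act as rendezvous point. With a phantom RP, the RP address itself is not assigned to any device. Instead, each spine has a loopback in a subnet that contains the RP address, each with a different prefix length, and the underlay routes traffic for the RP toward the spine that advertises the longest prefix.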

Here is an example of a Phantom RP:

rp-address = 11.11.11.2

Spine1 = 11.11.11.1/29

Spine2 = 11.11.11.3/28

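Both spines have the rp-address inside their loopback subnet, but Spine1 advertises the more specific /29 while Spine2 advertises a /28, so Spine1 is our RP. When Spine1 goes down, its /29 disappears from the routing table and traffic toward the RP address automatically follows the /28 to Spine2. (With OSPF the loopbacks need ip ospf network point-to-point for this, otherwise they are advertised as /32 host routes.) Let's move on with our config.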
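
The phantom RP selection is just longest-prefix matching, which can be sketched with Python's ipaddress module. The function name and the "down" parameter are hypothetical; the addresses are the ones from the example above.

```python
import ipaddress

RP_ADDRESS = ipaddress.ip_address("11.11.11.2")
SPINE_LOOPBACKS = {
    "Spine1": "11.11.11.1/29",
    "Spine2": "11.11.11.3/28",
}


def active_rp(rp, loopbacks, down=()):
    """Return the spine whose loopback subnet is the longest match for rp."""
    candidates = {}
    for name, cidr in loopbacks.items():
        if name in down:
            continue  # a failed spine no longer advertises its subnet
        network = ipaddress.ip_interface(cidr).network
        if rp in network:
            candidates[name] = network.prefixlen
    if not candidates:
        return None
    # The longest prefix length wins, as in a normal routing lookup.
    return max(candidates, key=candidates.get)


print(active_rp(RP_ADDRESS, SPINE_LOOPBACKS))
print(active_rp(RP_ADDRESS, SPINE_LOOPBACKS, down={"Spine1"}))
```

The first call picks Spine1 because of its /29, and the second call shows the failover to Spine2 once Spine1 is gone.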

Spine

feature pim

ip pim rp-address 11.11.11.2 group-list 239.0.0.0/24

int lo1

desc RP

ip add 11.11.11.2/29 #could also be another IP like 11.11.11.1/29

ip router ospf UNDERLAY area 0

ip pim sparse-mode

int eth1/1-2

ip pim sparse-mode

Leaf1 and Leaf2

feature pim

ip pim rp-address 11.11.11.2 group-list 239.0.0.0/24

int eth1/1

ip pim sparse-mode

Verify PIM adjacencies with show ip pim neighbor.

BGP EVPN

We will configure the evpn address-family now to create the evpn control plane. Instead of repeating every command for every neighbor I will use templates for certain commands.

Spine

feature bgp

feature nv overlay

nv overlay evpn

feature fabric forwarding

router bgp 65100

log-neighbor-changes

address-family ipv4 unicast

address-family l2vpn evpn

template peer TO_LEAFS

remote-as 65100

update-source lo0

address-family ipv4 unicast

send-community

send-community extended

route-reflector-client

address-family l2vpn evpn

send-community

send-community extended

route-reflector-client

neighbor 10.0.0.1

inherit peer TO_LEAFS

neighbor 10.0.0.2

inherit peer TO_LEAFS

Leaf1 and Leaf2

feature bgp

feature nv overlay

nv overlay evpn

router bgp 65100

log-neighbor-changes

address-family ipv4 unicast

address-family l2vpn evpn

template peer TO_SPINE

remote-as 65100

update-source lo0

address-family ipv4 unicast

send-community

send-community extended

address-family l2vpn evpn

send-community

send-community extended

neighbor 10.0.0.3

inherit peer TO_SPINE

Verify basic IPv4 peering with show ip bgp summary.

Verify BGP evpn peering with show bgp l2vpn evpn summary.

VXLAN

Finally, we configure the VXLAN commands. Since the spine doesn't participate in VXLAN and just reflects the routes we do not have to configure anything VXLAN related on the spine.

The config below is the same for leaf1 and leaf2. For leaf2 you only have to use a different IP for the loopback 1 interface. E.g. 10.1.0.2/32.

It doesn't matter whether the gateway lives on Leaf1 or Leaf2, since every leaf is exactly two hops away from every other leaf in a spine-leaf topology. That means we configure the SVI as gateway on every leaf and use an anycast gateway MAC.

For inter-VLAN traffic (L3 traffic) we configure an L3VNI, which is basically a separate VLAN that the switch uses when it needs to route traffic between different VLANs.

It is important to configure suppress-arp so that the local leaf answers ARP requests from its EVPN table instead of flooding them across the fabric for intra-VLAN forwarding.

Route targets are needed in two places: in the VRF section for inter-VLAN traffic and in the evpn section for intra-VLAN traffic. They work a bit like access lists that tell the leaf which routes it is allowed to import and export. As long as all leaves are in the same AS you can use route-target both auto, because the automatic value is derived from the AS number and the VNI. I commented the L3VNI out. If you also want to do inter-VLAN routing, you can configure the L3VNI part.
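
Why the same AS matters for the auto keyword can be shown with a small Python sketch. It assumes that the automatic route target is built as ASN:VNI, which is how I understand the NX-OS behaviour for 2-byte AS numbers; the function names and AS 65200 are made up for the example.

```python
def auto_route_target(asn, vni):
    """Assumed auto-derivation: route target = <AS number>:<VNI>."""
    return f"{asn}:{vni}"


def is_imported(local_asn, remote_asn, vni):
    """A route is imported only if the exported and imported targets match."""
    exported = auto_route_target(remote_asn, vni)
    wanted = auto_route_target(local_asn, vni)
    return exported == wanted


print(auto_route_target(65100, 10100))
print(is_imported(65100, 65100, 10100))  # both leaves in AS 65100
print(is_imported(65100, 65200, 10100))  # leaves in different AS numbers
```

With different AS numbers the derived values no longer match, so in that case you would have to set the route targets manually instead of using auto.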

Leaf1 and Leaf2

feature vn-segment-vlan-based

feature fabric forwarding

feature interface-vlan

fabric forwarding anycast-gateway-mac 0000.1111.2222 #so every vlan has same gateway mac and ip on every switch

vlan 100

vn-segment 10100

#vlan 200

# vn-segment 10200

#vlan 999

# name L3VNI

# vn-segment 10999

vrf context TNT1

# vni 10999

rd auto

address-family ipv4 unicast

route-target both auto

route-target both auto evpn

interface Vlan100

no shutdown

vrf member TNT1

no ip redirects

ip address 172.16.100.1/24

fabric forwarding mode anycast-gateway

#interface Vlan200

# no shutdown

# vrf member TNT1

# no ip redirects

# ip address 172.16.200.1/24

# fabric forwarding mode anycast-gateway

#int vlan 999

#vrf member TNT1

#ip forward

#mtu 9216

#no shut

evpn

vni 10100 l2

rd auto

route-target import auto

route-target export auto

#vni 10200 l2

#rd auto

#route-target import auto

#route-target export auto

int lo1

ip add 10.1.0.1/32

ip router ospf UNDERLAY area 0

ip pim sparse-mode

ex

#You need the two TCAM commands to carve the TCAM table. We take space away from the routing ACL (racl) region and give it to the arp-ether region so that ARP suppression works. Save the config and reload the switch afterwards.

hardware access-list tcam region racl 512

hardware access-list tcam region arp-ether 256 double-wide

#show hardware access-list tcam region | grep racl

#show hardware access-list tcam region | grep arp

int nve1 #source interface for the encapsulated packets; the destination IP is either the mcast-group IP for BUM traffic or the NVE source IP of the remote leaf for known unicast traffic

no shut

source-interface lo1

host-reachability protocol bgp

member vni 10100

mcast-group 239.0.0.10

suppress-arp

# member vni 10200

# mcast-group 239.0.0.20

# suppress-arp

#member vni 10999 associate-vrf

int e1/2

switchport access vlan 100

Now create two endpoints and ping between them so the leaves populate their EVPN tables. Verify the BGP EVPN table with show bgp l2vpn evpn and the VTEP peering with show nve peers. Also verify with show l2route evpn mac-ip all that the PCs are in the endpoint table. With show ip route vrf TNT1 you can see the internal routes between the leaves.

L3OUT

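To reach external networks that don't participate in your VXLAN network, you select a border leaf and redistribute routes between the VXLAN fabric and the external network, using route-maps to control what is redistributed. For the other leaves to receive the external prefixes over EVPN, the L3VNI part that is commented out in the VXLAN section has to be enabled.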

I use OSPF to peer with the external device.

Leaf1

interface Ethernet1/4 #to peer with external network device

no switchport

mtu 9216

vrf member TNT1

ip address 10.14.15.14/24

ip ospf network point-to-point

ip router ospf 1 area 0.0.0.0

no shutdown

route-map permit-bgp-ospf permit 10

route-map permit-ospf-bgp permit 10

router bgp 65100

vrf TNT1

address-family ipv4 unicast

#advertise l2vpn evpn

redistribute ospf 1 route-map permit-ospf-bgp #import OSPF 1 routes from the WAN device into BGP 65100 so that Leaf2 has the route in its EVPN control plane and can reach 100.100.100.100

router ospf 1

router-id 10.14.15.14

vrf TNT1

redistribute direct route-map permit-bgp-ospf #import direct routes for 172.16.100.0 vlan100 into ospf so you can reach vlan 100 through ospf from your external device

redistribute bgp 65100 route-map permit-bgp-ospf

External device

feature ospf

interface Ethernet1/1

description to Leaf-1

no switchport

mtu 9216

ip address 10.14.15.15/24

ip ospf network point-to-point

no ip ospf passive-interface

ip router ospf 1 area 0.0.0.0

no shutdown

interface loopback 0 #only used to test ping

ip address 100.100.100.100/32

ip router ospf 1 area 0

router ospf 1

router-id 10.14.15.15

On the external device, use show ip route ospf to check that the fabric routes are reachable through OSPF. On your leaves, use show bgp l2vpn evpn to check whether the 100.100.100.100 route is reachable through EVPN (show bgp l2vpn evpn summary only lists the peers and prefix counts).

VPC

Virtual Port Channel (vPC) is a Cisco Nexus feature that lets you form a port channel across two different devices, which is incredibly handy for redundancy. I used a Layer 2 switch that is connected to both leaves for this scenario.

Leaf1 and Leaf2

feature vpc

feature lacp

int mgmt0

vrf member management

ip add 172.16.1.1/24 #used for peer keep-alive packets

vpc domain 10

peer-switch

peer-keepalive destination 172.16.1.2 source 172.16.1.1

peer-gateway

ip arp synchronize

int e1/5

switchport mode trunk

spanning-tree port type network

channel-group 1 mode active

int po1

vpc peer-link

int e1/6

switchport mode trunk

channel-group 2 mode active

int po2

vpc 2

int lo1

ip add 10.2.2.10/32 secondary #used as VTEP IP in VPC, same on both leaves

route-map permit-static-bgp permit 10

router bgp 65100

vrf TNT1

address-family ipv4 unicast

redistribute static route-map permit-static-bgp

address-family l2vpn evpn

advertise-pip #this is important when a border leaf is in a vPC with a non-border leaf: the border leaf advertises its physical IP instead of the shared VTEP IP (secondary IP) so the other devices know exactly where the border leaf resides

int nve1

advertise virtual-rmac #must be configured when using the command advertise-pip

For Leaf2 the only config differences are the mgmt0 address (172.16.1.2 instead of 172.16.1.1) and the swapped peer-keepalive addresses (destination 172.16.1.1 source 172.16.1.2).

L2switch

feature lacp

int e1/1

switchport mode trunk

channel-group 2 mode active

int e1/2

switchport mode trunk

channel-group 2 mode active

Verify with show vpc brief that the vPC and the port-channel have formed.

BGP EVPN Ingress Replication

NVE = VTEP

Ingress replication, or head-end replication, for VXLAN EVPN is deployed when no IP multicast underlay is used. It is a unicast approach to handling multi-destination traffic: the ingress device (the NVE interface) replicates every BUM packet and sends a separate unicast copy to each remote egress device.
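
As a rough illustration of head-end replication, the following Python sketch creates one unicast copy of a BUM frame per remote VTEP. The function name, the dictionary layout and the third VTEP address are hypothetical; a real device does this in hardware and learns the list of remote VTEPs through BGP EVPN.

```python
def head_end_replicate(bum_frame, vni, local_vtep, remote_vteps):
    """Return one unicast copy of the BUM frame per remote VTEP."""
    copies = []
    for remote in remote_vteps:
        if remote == local_vtep:
            continue  # never send a copy back to ourselves
        copies.append({
            "src": local_vtep,   # outer source IP (our NVE source interface)
            "dst": remote,       # outer destination IP (unicast, not a group)
            "vni": vni,
            "payload": bum_frame,
        })
    return copies


copies = head_end_replicate(
    b"arp-request", 10100, "10.1.0.1", ["10.1.0.2", "10.1.0.3"]
)
for copy in copies:
    print(copy["dst"])
```

The number of copies grows with the number of remote VTEPs, which is the trade-off compared to a multicast underlay where the ingress leaf sends a single packet to the group address.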

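This approach is also used in Cisco ACI Multi-Site, where the VTEPs replicate every BUM packet and send the copies as unicast to the remote VTEPs. The VTEPs in ACI are basically what the NVE interfaces are here.

Note that the commands below follow the Cisco IOS XE syntax (l2vpn evpn, member vni ... ingress-replication). On NX-OS the equivalent is ingress-replication protocol bgp under member vni on the nve1 interface.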

l2vpn evpn

replication-type ingress

l2vpn evpn instance 1 vlan-based

encapsulation vxlan

int nve1

source-interface lo1

host-reachability protocol bgp

member vni 10100 ingress-replication #instead of the multicast config

#show l2vpn evpn evi 1 detail

Thanks for reading my article. If you have any questions or recommendations you can message me via arvednetblog@gmail.com.